Why did Xavier & Micky overhaul HHS's ASTP/ONC right before Trump's inauguration?

Also, the key takeaway from Biden's final conference on AI in healthcare: certified health IT or not, doctors still go to jail.

Welcome to AI Health Uncut, a brutally honest newsletter on AI, innovation, and the state of the healthcare market. If you’d like to sign up to receive issues over email, you can do so here.

While America crowns its next leader today and reflects on Martin Luther King Jr.’s legacy, you might wonder why on earth I’m focused on Biden’s last word on AI in healthcare.

Well, strong government support for AI advancements in healthcare is vital, and I can only hope the Trump administration understands its importance. But certain developments from Biden’s final AI conference give me confidence that AI in healthcare is here to stay, no matter which party is in power.

Let me share some insights from this historic conference—because, let’s face it, I went so you didn’t have to. 😉

As the name suggests, the “AI in Healthcare Safety Program” conference was centered on AI safety. What stood out to me was the diverse mix of government, non-profit, advocacy, and academic presenters. It wasn’t just another echo chamber.

Here’s a takeaway that’s as shocking as it is telling (or is it?), straight from the ASTP/ONC (Assistant Secretary for Technology Policy and the Office of the National Coordinator for Health Information Technology) presentation:

Whether a medical provider buys certified or non-certified tech, if that product leads to a patient’s death, it’s the doctor who faces jail time—not the vendor.

(Yes, this absolutely echoes the premise of my article, “Doctors Go to Jail. Engineers Don’t.”—which, by the way, has been reprinted in several outlets, including The American Journal of Healthcare Strategy.)

TL;DR:

1. Why the F*ck Was ASTP/ONC Reshuffled Right Before Trump’s Presidency? What Was the Point?

2. ONC Health IT Certification Program and “AI Nutrition Labels”

3. CHAI (Coalition for Health AI): Building Responsible AI for Equitable Health Outcomes

4. AQIPS PSO Members’ Use of AI

5. Advancing Patient Safety Through AI: HQI’s Vision for Zero Harm and Data-Driven Care

6. Ferrum Health National Patient Safety Organization

7. AHRQ Updates: AI in Healthcare Safety Program

8. My Rebuttal

9. Conclusion

10. Coming up Soon: My Hippocratic AI Investigation

1. Why the F*ck Was ASTP/ONC Reshuffled Right Before Trump’s Presidency? What Was the Point?

With the utmost respect—especially for Micky—WTF is going on? You introduced the massive ASTP/ONC overhaul back in July, fully aware it would take months to implement. Did it never cross your minds, even slightly, to consider the possibility of Trump’s victory? What will happen to all these new hires? You’re moving on, but do you honestly think Trump will keep these new hires—likely exceptional public servants—who trusted you with their futures?

Apologies for the strong language, but I am at a loss for words. 🤯

Of course, I’m talking about two of President Biden’s key appointees: Xavier Becerra, Secretary of the U.S. Department of Health and Human Services (HHS), and Micky Tripathi, PhD, MPP, Assistant Secretary for Technology Policy and head of the Office of the National Coordinator for Health Information Technology (ASTP/ONC).

In my opinion, the HHS—specifically the Assistant Secretary for Technology Policy and the Office of the National Coordinator for Health Information Technology (ASTP/ONC) under the Biden Administration—assembled a solid team of tech experts. I’m not sure how Trump would handle that office, but I’ve learned to appreciate the current direction, particularly the strong emphasis on AI expertise. Micky Tripathi’s term was impactful, with a clear focus on advancing new technologies and integrating AI.

How do I know some of these details? I applied for the newly created Chief Artificial Intelligence Officer (CAIO) position at HHS/ONC and, well, didn’t get the job.

Last week(!), the ASTP/ONC announced the completion of its months-long departmental “reshuffle,” introducing three new senior positions under the brand-new Office of the Chief Technology Officer: Chief Technology Officer (CTO), Chief Data Officer (CDO), and Chief Artificial Intelligence Officer (CAIO). All this was done in the name of diversity, equity, and inclusion (DEI).

Back in the summer of 2024, when talk of this reshuffle began, I couldn’t help but wonder, “What if Trump wins?” They’ve created three new positions and hired an entire department—because it’s not just three heads. There are several junior roles under them—for what? A few weeks of work if the administration changes? If Trump takes office, he could logically decide to eliminate these roles as part of his Musk-led government efficiency agenda. What happens to all the people who just started their first day on the job?

Is this some kind of “reverse pardon”—a way to pad résumés, even if only for a few days? Seriously, what were they thinking? With Donald Trump and Elon Musk stepping in now, you can bet they’ll have something to say about AI in healthcare and whether these changes were even necessary. Maybe not on day one, but soon.

What a mess.

Now, we wait to see who will replace Messrs. Becerra and Tripathi. It’s going to be interesting. ⏳

Anyway, shifting gears back to the conference—here’s the agenda:

2. ONC Health IT Certification Program and “AI Nutrition Labels”

Jeffery Smith, MPP

Jordan Everson, PhD, MPP

The ONC presentation highlights the U.S. Health IT Certification Program as the backbone of the nation’s digital health infrastructure. While participation is voluntary, over 96% of hospitals and 80% of clinical offices utilize certified products. The program sets baseline standards for data and interoperability. A key takeaway is the revision of Clinical Decision Support (CDS) certification into Decision Support Interventions (DSI) to accommodate AI’s role in electronic health records (EHRs).

As of January 1, 2025, certified developers are required to provide “nutrition labels” for AI technologies. Though met with some skepticism by the medical community, this initiative aligns with the FAVES principles: fair, appropriate, valid, effective, and safe. Developers assume stewardship of Predictive DSI embedded in their modules, but medical providers remain entirely responsible for patient outcomes, including deaths caused by faulty AI recommendations, which could expose providers to criminal liability if negligence is proven. Vendors are notably shielded unless explicitly found at fault.

3. CHAI (Coalition for Health AI): Building Responsible AI for Equitable Health Outcomes

Brian Anderson, MD, Chief Executive Officer, CHAI

CHAI’s framework focuses on equitable AI use and transparency. By assembling diverse stakeholders, including patients, medical institutions, startups, and government agencies, it seeks to create a gold-standard system for responsible AI deployment in healthcare.

Key initiatives include:

Establishing Assurance Labs to evaluate AI performance.

Introducing national registries with model report cards and “AI Nutrition Labels.”

Developing standards for addressing AI biases and ensuring safety.

The generative AI landscape in healthcare has rapidly evolved to meet the increasing demands of health systems, payers, and life science companies, emphasizing democratized deployment through equitable testing and evaluation. However, this progress has highlighted challenges like the digital divide and the need for inclusive data sets, systematic validation, and transparent deployment frameworks.

CHAI acknowledges challenges, particularly the growing digital divide and lack of universal regulation for health AI. The emphasis on consensus-building for equitable testing is critical to bridging these gaps. Still, concerns remain about real-world enforcement and long-term surveillance of deployed systems.

4. AQIPS PSO Members’ Use of AI

Peggy Binzer, JD, Executive Director, Alliance for Quality Improvement and Patient Safety (AQIPS)

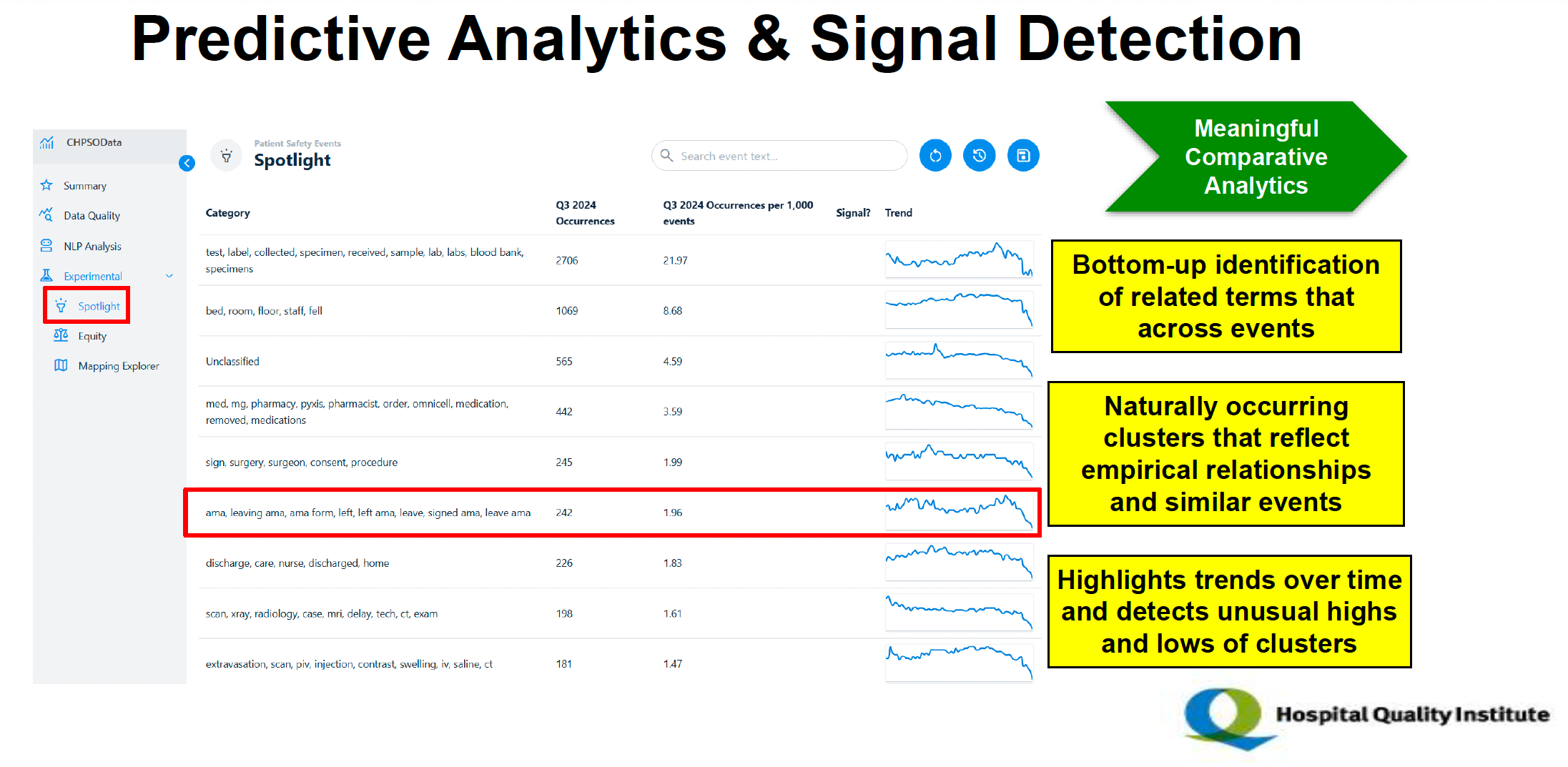

The Alliance for Quality Improvement and Patient Safety (AQIPS) supports over 60 Patient Safety Organizations (PSOs) using AI for tasks like fall prediction, data analysis, and event categorization. Training emphasizes safe AI use and avoiding misuse like sharing sensitive data. Key PSOs showcase AI for clinical gaps, taxonomy enhancements, and predictive analytics at conferences and demonstrations. The Hospital Quality Institute (HQI) leverages AI for improving data quality, reporting, and comparative analytics.

5. Advancing Patient Safety Through AI: HQI’s Vision for Zero Harm and Data-Driven Care

Robert Imhoff, President of the Hospital Quality Institute (HQI)

Scott Masten, PhD, VP Measurement Science & Data Analytics, HQI

The HQI (Hospital Quality Institute) presentation emphasizes the organization’s efforts to enhance patient safety and care quality through innovative approaches, particularly artificial intelligence (AI). Key highlights include:

Mission and Scope: HQI, a nonprofit part of the California Hospital Association, aims to support its members in achieving zero harm and advancing patient safety through collaborative efforts with 495 member hospitals across 21 states.

AI Integration in Patient Safety: HQI leverages AI and natural language processing (NLP) for:

Streamlined Event Reporting: Automated data mapping retains historical event uploads and reduces manual input through pre-mapped variables.

Data Categorization: An enhanced taxonomy expands from 10 to 45+ event categories, recategorizing ambiguous “Other” events.

Comparative Analytics: Standardized and cleaned data facilitates valid comparisons across facilities and highlights trends, outliers, and performance differences.

Predictive Analytics: AI identifies clusters of related safety events and trends, enabling proactive harm reduction.

Focus on Equity: HQI incorporates race and ethnicity in data analysis to address health disparities.

Impact and Demonstrations: HQI showcased AI’s ability to improve data quality, reduce harm, and provide actionable insights through a live demonstration of the Collaborative Healthcare Patient Safety Organization (CHPSO) platform.

AI’s Limitations: Patient Safety Organizations (PSOs) are advised to train staff to recognize AI’s limitations, such as risks of data sharing and algorithmic inaccuracies. Advocate Health PSO notably warned against using AI for drafting sensitive documents or handling personal health information due to privacy vulnerabilities.

6. Ferrum Health National Patient Safety Organization

Peter Eason, Vice President, Finance and Operations, Ferrum Health National Patient Safety Organization

Ferrum leverages AI to enhance diagnostic accuracy in radiology by reviewing imaging reports and surfacing discrepancies for further evaluation.

Ferrum PSO Role: Ferrum Health National Patient Safety Organization (PSO) supports healthcare providers by using AI to identify potential discrepancies in radiological images, acting as a safety net.

AI Integration: Ferrum combines visual classifiers (for images) and language models (for text) to enhance radiology workflows, surfacing overlooked findings for further review.

Real-World Impact: The AI system has helped flag critical findings that led to timely follow-ups and improved patient outcomes.

Data Insights: The system analyzes trends, such as discrepancies based on the time of day, and uses this data to guide focused training opportunities for healthcare providers.

Patient Safety Work Product: The use of AI has resulted in thousands of clinical interventions and ongoing improvements in patient care across multiple sites.
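For the technically curious, here is a minimal Python sketch of the "safety net" idea described above: compare what an image classifier thinks it sees with what the radiology report actually says, and surface the gaps for human review. Everything in it is my own assumption for illustration. The `ImageFinding` class, the `flag_discrepancies` function, the 0.8 threshold, and the sample data are all hypothetical, and plain substring matching stands in for the language model. It is not Ferrum's implementation.

```python
from dataclasses import dataclass


@dataclass
class ImageFinding:
    """One finding proposed by a (hypothetical) image classifier."""
    label: str         # e.g. "pulmonary nodule"
    confidence: float  # classifier score between 0 and 1


def flag_discrepancies(image_findings, report_text, threshold=0.8):
    """Return labels the classifier is confident about but the report never mentions.

    Substring matching is an illustrative stand-in for a language model
    that reads the report; the threshold is an assumed value.
    """
    report = report_text.lower()
    return [
        f.label
        for f in image_findings
        if f.confidence >= threshold and f.label.lower() not in report
    ]


# Made-up example data, not taken from any real case.
findings = [
    ImageFinding("pulmonary nodule", 0.93),
    ImageFinding("pleural effusion", 0.88),
    ImageFinding("rib fracture", 0.41),
]
report = "Small right pleural effusion. No acute osseous abnormality."

flagged = flag_discrepancies(findings, report)
print(flagged)  # findings to route to a radiologist for a second look
```

In this toy version, a flagged label is only a prompt for a second look by a radiologist. The clinician still makes the call, which is exactly why the liability question in my rebuttal below matters.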

7. AHRQ Updates: AI in Healthcare Safety Program

Andrea Timashenka, JD, Director, PSO Division, Center for Quality Improvement and Patient Safety, AHRQ

The Agency for Healthcare Research and Quality (AHRQ) provides updates on its AI in Healthcare Safety Program, highlighting efforts to enhance patient safety in healthcare settings where AI is implemented. Key initiatives include:

Developing a safety program to identify, track, and reduce AI-related clinical errors as mandated by Executive Order 14110. This includes creating a framework for identifying harm caused by algorithms and providing guidelines to address these issues.

Collaborating with stakeholders such as Patient Safety Organizations, federal agencies like the Department of Defense and the Department of Veterans Affairs, researchers, and software vendors to refine data collection, analyze safety incidents, and disseminate best practices.

Defining AI-related safety events broadly as harm or potential harm from unintended algorithm behavior or healthcare staff misuse of AI technologies.

Utilizing the National Patient Safety Database (NPSD) to aggregate and analyze data on AI-related incidents nationally.

Offering resources such as educational briefs, evidence reviews on algorithm bias, and funding opportunities to examine AI’s impact on healthcare, with a focus on addressing racial and ethnic disparities in care.

Note: If you’re interested in the presentation slides, I’m happy to share them. For some reason, I can’t find them online, but I have them on my computer.

8. My Rebuttal

1️⃣ As Always, Doctors Go to Jail, Engineers Don’t: It was only by asking a direct question during the Q&A session of the ONC presentation that I confirmed that medical providers remain fully liable for AI-driven errors, while vendors benefit from significant immunity. This disparity discourages providers from adopting innovative but potentially risky AI tools. Policymakers must address this imbalance to promote shared accountability.

2️⃣ Transparency and Equity: The introduction of “AI nutrition labels” and Assurance Labs by ONC and CHAI is a step toward transparency. However, the effectiveness of these measures depends on widespread adoption and rigorous enforcement. CHAI’s emphasis on equitable testing frameworks could mitigate biases but lacks details on how underrepresented groups will benefit.

3️⃣ Safety and Real-World Validation: AI’s potential for harm, particularly through biased or erroneous algorithms, is a recurring theme. PSOs play a critical role in validating AI tools post-deployment. However, systemic safeguards, such as AHRQ’s central tracking repository, must be operationalized to ensure that lessons from AI failures lead to meaningful reforms.

4️⃣ Regulatory Gaps: Despite advances in defining AI safety events and setting standards, the absence of a universal regulatory framework leaves gaps in implementation. The presentations advocate for collaboration among federal and private entities, but enforcement mechanisms remain vague.

9. Conclusion

The outgoing Biden administration appears to be leaving many HHS ASTP/ONC employees in a vulnerable position. That’s simply not right.

On the other hand, the spirit of Biden’s final conference on healthcare AI underscores the transformative potential of AI in healthcare, tempered by significant ethical, legal, and practical challenges. While efforts to improve transparency, safety, and equity in American healthcare are commendable, the disproportionate burden placed on medical providers to bear ultimate responsibility for AI-related outcomes highlights an urgent need for reforms to ensure fair accountability.

Put simply, as of Inauguration Day 2025: doctors still go to jail, engineers don’t.

10. Coming up Soon: My Hippocratic AI Investigation

Oh boy, let me tell you—I’ve been so deep into the tangled web that is Hippocratic AI. The investigation isn’t quite finished yet. I’m still editing, processing, analyzing data, poring over technical docs, and, of course, sifting through interviews with former employees and business partners. Spoiler alert: it’s messy. Very messy.

But don’t worry—unless I meet an “accidental” end, the full story is coming next week. Stay tuned…

In the meantime, here’s a little homework for you: take a look at what I’ve already written about Hippocratic AI and 14 other AI health liars. Let me know what you think.

These 15 Health AI Companies Have Been Lying About What Their AI Can Do (Part 1 of 2)

15 Health AI Liars Exposed—Including One That Just Raised $70M at a $0.5B Valuation (Part 2 of 2)

👉👉👉👉👉 Hi! My name is Sergei Polevikov. I’m an AI researcher and a healthcare AI startup founder. In my newsletter ‘AI Health Uncut,’ I combine my knowledge of AI models with my unique skills in analyzing the financial health of digital health companies. Why “Uncut”? Because I never sugarcoat or filter the hard truth. I don’t play games, I don’t work for anyone, and therefore, with your support, I produce the most original, the most unbiased, the most unapologetic research in AI, innovation, and healthcare. Thank you for your support of my work. You’re part of a vibrant community of healthcare AI enthusiasts! Your engagement matters. 🙏🙏🙏🙏🙏

Interesting post. Yes, the new hires are vulnerable, but one of them worked in the last Trump administration, so we’ll see. I wish, though, that you had covered more deeply the issue of CHAI and regulatory capture. There are lots of people in this space who are experienced healthcare folk who disagree with CHAI’s approach. Lastly, it’s important to remember the scope of ASTP/ONC regulatory authority, which is certified EHRs. They don’t regulate AI developed by health systems when that AI is not for sale to others, and I suspect their authority over AI developed by health systems for sale, or over providers who use their own data stores to develop AI outside of a certified EHR, would certainly be questionable under Loper Bright.

And of course, a manufacturer of AI that is a device would certainly be liable under FDA standards.

I think it’s good the PSOs are involved; that’s the only way to elicit accurate information so improvements can be made, but the staffs at the PSOs will have to up their tech knowledge significantly.