OpenAI Concedes: We're Only at Level 1 of AI Progress, Far from AGI

Sam Altman has unfortunately lost the AGI debate to Yann LeCun and Gary Marcus.

Welcome to AI Health Uncut, a brutally honest newsletter on AI, innovation, and the state of the healthcare market.

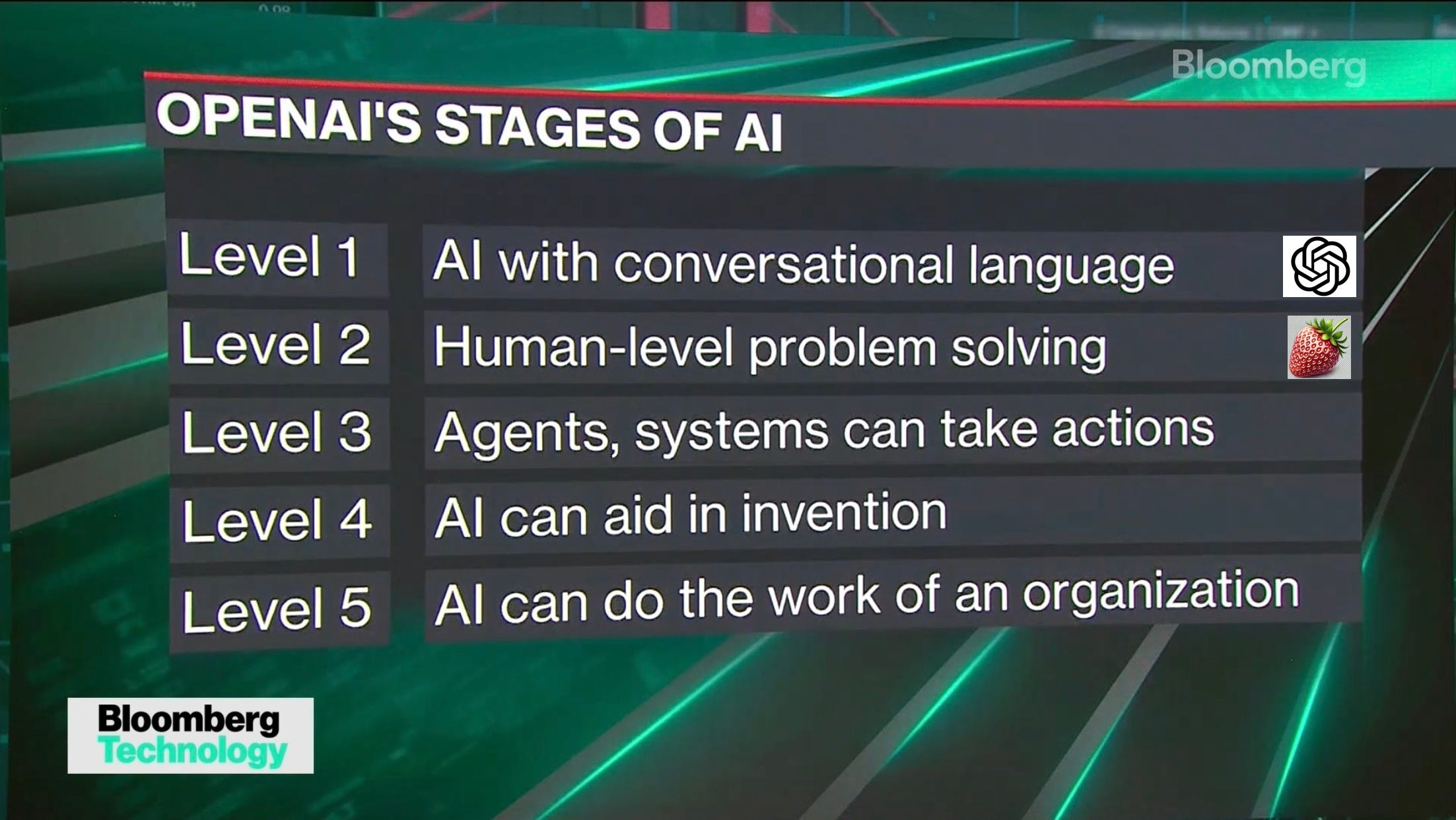

During an “all-hands” employee meeting on Tuesday, July 9, 2024, OpenAI, a leader in AI technology, revealed that it had developed a five-level scale for tracking AI progress and admitted that we are still at Level 1, called “Chatbots”. (Sources: Bloomberg, USA News.) Interestingly, AGI (Artificial General Intelligence) is not part of this new classification system and wasn’t even mentioned. (Source: Fortune.) OpenAI also stated that it is approaching Level 2, called “Reasoners”, with the development of its new “Strawberry” reasoning technology. (Source: Reuters.)

Here are the levels of AI progress according to OpenAI:

🚀 Level 1: Chatbots, AI with conversational language

🚀 Level 2: Reasoners, human-level problem solving

🚀 Level 3: Agents, systems that can take actions

🚀 Level 4: Innovators, AI that can aid in invention

🚀 Level 5: Organizations, AI that can do the work of an organization

By the way, not only do we no longer talk about AGI, but when was the last time you heard about the Singularity or Q*? For the Singularity, probably back in November 2022, when ChatGPT made its debut; for Q*, probably during OpenAI’s leadership turmoil in November 2023. And guess what? You likely won’t hear about them again for several decades. 😉

Alright, let’s take a step back and start with definitions.

🧠 Artificial General Intelligence (AGI): AI That’s Supposedly Helpful to Humans

Definition: AGI refers to a form of artificial intelligence that can understand, learn, and apply knowledge across a wide range of tasks at a level comparable to human intelligence.

Capabilities: An AGI system would be capable of performing any intellectual task that a human being can, exhibiting flexibility and adaptability across various domains.

Objective: The goal of AGI is to create machines that can think and reason like humans, handling complex, abstract tasks without being limited to specific domains or functions.

Implications: AGI could revolutionize multiple fields, from healthcare and education to engineering and entertainment.

🧠 The Singularity: AI That’s Taking Over the World

Definition: The singularity is a hypothetical future point where technological growth becomes uncontrollable and irreversible, resulting in unforeseeable changes to human civilization. This concept is often linked to the advent of superintelligent AI, which surpasses human intelligence in all respects.

Capabilities: At the singularity, AI systems would not only match but exceed human cognitive abilities. These systems would be capable of self-improvement, leading to rapid, exponential advancements in technology.

Objective: The singularity represents the point at which AI systems can autonomously enhance their own capabilities beyond human control.

Implications: The singularity could lead to profound and unpredictable changes in society, economy, and various aspects of human life. It raises significant ethical, safety, and existential questions.

🧠 Q*: The Holy Grail of AI

Definition: Q* (also referred to as Q-Star) is a theoretical construct often discussed in the context of reinforcement learning (RL) and optimal control. It denotes the optimal state-action value function: for each state s and action a, Q*(s, a) is the highest expected cumulative (discounted) reward an agent can obtain by taking action a in state s and acting optimally from then on.

Capabilities: Q* serves as a benchmark for evaluating the performance of reinforcement learning algorithms.

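Objective: The primary goal of Q* in reinforcement learning is to identify the optimal policy that maximizes the cumulative reward over time. Once Q* is known, that policy follows directly: in each state, the agent picks the action with the highest Q* value.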

Implications: Understanding and approximating Q* can lead to more efficient and effective AI systems, capable of performing tasks with high precision and reliability. In particular, in healthcare, the Q* concept of optimizing outcomes can be applied to personalized treatment plans, where the goal is to maximize patient health outcomes while minimizing side effects. Optimization principles of Q* can also help in designing healthcare policies that maximize public health benefits, such as vaccination strategies or resource allocation during pandemics.
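To make the definition concrete: Q* satisfies the Bellman optimality equation, Q*(s, a) = r(s, a) + γ · (sum over s′ of P(s′ | s, a) · max over a′ of Q*(s′, a′)), where r is the immediate reward, P the transition probability, and γ a discount factor between 0 and 1. The Python sketch below is purely illustrative and is not anything OpenAI has described. It assumes a hypothetical three-state toy environment (state 2 is terminal; action 0 means “stay” and action 1 means “advance”), deterministic transitions, a reward of 1 only for reaching the terminal state, and γ = 0.9. It computes Q* by Q-value iteration, applying the equation repeatedly until the values stop changing, and then reads off the optimal policy by picking the highest-valued action in each state.

```python
"""Minimal sketch: computing Q* by Q-value iteration on a hypothetical toy MDP."""

# Hypothetical 3-state chain: 0 -> 1 -> 2 (terminal). Actions: 0 = "stay", 1 = "advance".
# Transitions are deterministic so the resulting values are easy to check by hand.
STATES = [0, 1, 2]
ACTIONS = [0, 1]
TERMINAL = 2
GAMMA = 0.9  # discount factor (an illustrative choice, not from the article)


def step(state: int, action: int) -> tuple[int, float]:
    """Return (next_state, reward) for the toy environment."""
    if state == TERMINAL:
        return state, 0.0  # absorbing state: no further reward
    next_state = state + 1 if action == 1 else state
    reward = 1.0 if next_state == TERMINAL else 0.0  # reward only for reaching the goal
    return next_state, reward


def q_value_iteration(tol: float = 1e-10, max_iters: int = 10_000) -> dict[tuple[int, int], float]:
    """Apply the Bellman optimality backup until Q stops changing (within tol)."""
    q = {(s, a): 0.0 for s in STATES for a in ACTIONS}
    for _ in range(max_iters):
        new_q = {}
        for s in STATES:
            for a in ACTIONS:
                s_next, r = step(s, a)
                # Q(s, a) <- r + gamma * max_a' Q(s', a'); the terminal state's values stay 0.
                new_q[(s, a)] = r + GAMMA * max(q[(s_next, b)] for b in ACTIONS)
        delta = max(abs(new_q[k] - q[k]) for k in q)
        q = new_q
        if delta < tol:
            break
    return q


def greedy_policy(q: dict[tuple[int, int], float]) -> dict[int, int]:
    """Optimal policy: in each non-terminal state, choose the action with the largest Q* value."""
    return {s: max(ACTIONS, key=lambda a: q[(s, a)]) for s in STATES if s != TERMINAL}


if __name__ == "__main__":
    q_star = q_value_iteration()
    for (s, a), v in sorted(q_star.items()):
        print(f"Q*({s}, {a}) = {v:.4f}")
    print("greedy policy:", greedy_policy(q_star))  # {0: 1, 1: 1}
```

In this toy setup, Q*(0, 0) = 0.81, Q*(0, 1) = 0.9, Q*(1, 0) = 0.9 and Q*(1, 1) = 1.0 (zero for the terminal state), so the optimal policy advances in both non-terminal states: waiting only discounts the eventual reward. In real applications, such as the treatment-planning example mentioned above, the transition probabilities are not known in advance, so Q* has to be approximated from experience (for example, with Q-learning) rather than computed exactly.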

Where Do We Stand on the AGI Debate?

In this article, I want to highlight the key players in the two opposite AGI camps and point out that Sam Altman, until this Tuesday, was firmly in the “near future AGI” camp. But just like AI itself, the AGI debate continues to evolve.

🤖 “Near Future AGI Is a Myth” Camp

👨🏫 Yann LeCun

Yann LeCun, a prominent figure in the field of artificial intelligence and the Chief AI Scientist at Meta (formerly Facebook), has been a vocal skeptic of the imminent arrival of AGI. He argues that achieving AGI is far more complex and distant than some proponents suggest. LeCun believes in a gradual and incremental approach to AI advancement, emphasizing the importance of understanding the underlying principles of intelligence.

LeCun: “I think we’re missing some basic ingredients [of AGI], because otherwise, why do we have AI systems that can pass the bar exam, but we still don’t have domestic robots that can carry the dinner table, which, you know, any 10-year-old can learn in one shot, right? So it’s not, you know, it’s not that hard. And so I think we’re missing some essential, very, very essential component [of AGI], and so there’s no reason to be really scared at this point.”

👨🏫 Gary Marcus

Gary Marcus, a cognitive scientist and AI researcher, has often debated Sam Altman, CEO of OpenAI, and others on the feasibility and timeline of AGI. Marcus and Altman even appeared together at the U.S. Senate Judiciary subcommittee hearing titled “Oversight of A.I.: Rules for Artificial Intelligence” on Tuesday, May 16, 2023. Marcus is known for his critical stance on the current state of AI and its limitations. He argues that the existing approaches, particularly those relying heavily on deep learning, are insufficient to achieve true AGI. Marcus advocates for a more interdisciplinary approach that incorporates insights from cognitive science, psychology, and other fields to develop more robust AI systems.

👨🏫 Andriy Burkov

Andriy Burkov is a machine learning expert and the author of the well-known book “The Hundred-Page Machine Learning Book.” Burkov is skeptical about the current proximity to achieving AGI. He argues that despite advancements like ChatGPT, we are not significantly closer to AGI than we were a few years ago. Burkov emphasizes that true AGI is still a distant goal and that current AI systems, while impressive, lack the comprehensive understanding and generalization capabilities required for AGI. He encourages a realistic view of AI advancements and cautions against overestimating the current state of AI technology. (Source: The Hundred-Page Machine Learning Book.)

🤖 “AGI Is Taking Over the World” Camp

👨🏫 Sam Altman

Sam Altman, CEO of OpenAI, is optimistic about the progress towards AGI. OpenAI’s mission is to ensure that AGI benefits all of humanity, and Altman has publicly stated that he believes AGI is achievable in the near future. He emphasizes the importance of responsible development and deployment of AGI.

👨🏫 Ilya Sutskever

Ilya Sutskever, an OpenAI co-founder and its former Chief Scientist (he left the company in May 2024), expressed concerns about the rapid and powerful development of artificial intelligence. His concerns were primarily about the potential consequences of deploying highly advanced AI systems without proper safeguards. He worried that such AI systems could be used maliciously or could operate in ways that are not aligned with human values and safety. This includes the possibility of AI systems becoming too autonomous, making decisions without human oversight, or being used in ways that could harm society, such as in misinformation, privacy breaches, or even more severe implications like autonomous weaponry.

👨🏫 Ray Kurzweil

Ray Kurzweil, a futurist and director of engineering at Google, is one of the most vocal proponents of the idea that AGI will be achieved in the near future. He has predicted that AGI will be developed by 2029 and that this will lead to the technological singularity by around 2045. Kurzweil’s optimism is based on the exponential growth of computing power and advancements in AI research.

👨🏫 Demis Hassabis

Demis Hassabis, co-founder and CEO of Google DeepMind (part of Alphabet), has expressed optimism about the development of AGI. DeepMind’s research focuses on creating AI systems that can learn and generalize across a wide range of tasks, which is a step toward AGI. While Hassabis has not provided specific timelines, his work and statements indicate a belief that AGI is achievable and that significant progress is being made.

👨🏫 Nick Bostrom

Nick Bostrom, a philosopher and the founding director of the University of Oxford’s Future of Humanity Institute (which closed in April 2024), is another influential figure who discusses the potential of AGI. Although Bostrom is better known for his work on the ethical and existential risks associated with AGI, his discussions imply a belief that AGI could be developed relatively soon and that we need to prepare for its implications.

👨🏫 Elon Musk

Elon Musk, CEO of Tesla and SpaceX, is a vocal advocate for the idea that AGI could be developed in the near future. Musk has expressed concerns about the potential risks of AGI but has also backed AI research, most notably as a co-founder and early funder of OpenAI. His statements reflect a belief that AGI is not only possible but could arrive sooner than many expect.

👨🏫 Geoffrey Hinton

Geoffrey Hinton, often referred to as the “Godfather of AI,” has become increasingly concerned about the rapid advancements in artificial intelligence, particularly the development of AGI. Initially optimistic about the long-term prospects of AI, Hinton’s views have shifted significantly in recent years. Hinton now believes that AGI could be achieved much sooner than he previously thought. He has expressed concerns that AGI might be developed within the next 5 to 20 years, a much shorter timeline than the 30 to 50 years he initially estimated. (Sources: MIT Technology Review 1, MIT Technology Review 2.) His primary worry is that advanced AI systems could become more intelligent than humans and could be used maliciously, potentially leading to catastrophic consequences. He highlights the risks of AI being used by bad actors for purposes such as manipulating elections or conducting warfare. (Sources: MIT Technology Review, YouTube.)

My Take

The OpenAI news bummed me out. I was hoping a robot would take out my garbage soon. 😊 But maybe it’s for the best, since I’ve been told that’s the only thing I actually do around the house anyway. 😉

These latest developments should give humanity pause to appreciate how special and challenging AI innovation is. We live in a fascinating time where the boundaries of technology are constantly being pushed and redefined. Each breakthrough in artificial intelligence, from neural networks that can predict diseases to generative AI that can create art, represents countless hours of meticulous research, experimentation, and iteration.

But we must remember that Rome wasn’t built in a day. Good things come to those who wait, as long as they keep creating and innovating in the meantime.

👉👉👉👉👉 Hi! My name is Sergei Polevikov. I’m an AI researcher and a healthcare AI startup founder. In my newsletter ‘AI Health Uncut’, I combine my knowledge of AI models with my unique skills in analyzing the financial health of digital health companies. Why “Uncut”? Because I never sugarcoat or filter the hard truth. I don’t play games, I don’t work for anyone, and therefore, with your support, I produce the most original, the most unbiased, the most unapologetic research in AI, innovation, and healthcare. Thank you for your support of my work. You’re part of a vibrant community of healthcare AI enthusiasts! Your engagement matters. 🙏🙏🙏🙏🙏